Think about it. The state of the robot, the readings of its sensors, and the effects of its control signals are in constant flux.

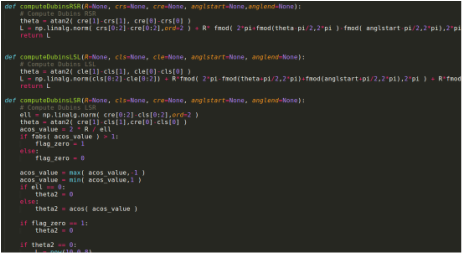

The Python code that implements the final transformation is in supervisor.py. The final control design uses the follow-wall behavior for almost all encounters with obstacles. Thanks to our odometry, we know what our current coordinates and heading are. This is a motion planning and path tracking simulation with NMPC of C-GMRES. It will make many assumptions about the world. In the animation, cyan points are searched nodes. If this project helps your robotics project, please let me know by creating an issue. Our reference robot is equipped with nine infrared sensors (the newer model has eight infrared and five ultrasonic proximity sensors) arranged in a skirt in every direction. It can calculate a rotation matrix and a translation vector between one set of points and another. There are more sensors facing the front of the robot than the back because it is usually more important for the robot to know what is in front of it than what is behind it. While even basic robotics programming is a tough field of study requiring great patience, it is also a fascinating and immensely rewarding one. To make up our minds, we select the direction that will move us closer to the goal immediately. This is a 2D rectangle fitting for vehicle detection. In this simulation, x and y are unknown, and yaw is known. Then ω will be zero and v will be maximum speed. The supervising state machine switches from one mode to another at discrete times (when goals are achieved or the environment suddenly changes too much), while each behavior uses sensors and wheels to react continuously to environment changes. As an example, in 2007 a set of behaviors was used in the DARPA Urban Challenge, an early competition for autonomous driving cars!
Just as you would use a real robot in the real world without paying too much attention to the laws of physics involved, you can ignore how the robot is simulated and skip directly to how the controller software is programmed, since it will be almost the same between the real world and a simulation. Sometimes it just oscillates back and forth endlessly on the wrong side of an obstacle. It does not have a lot of bells and whistles, but it is built to do one thing very well: provide an accurate simulation of a mobile robot and give an aspiring roboticist a simple framework for practicing robot software programming. Thus, the Python function for determining the distance indicated must convert these readings into meters.
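To make the conversion into meters concrete, here is a minimal sketch. It assumes the raw reading decays exponentially with distance; the constants `RAW_MAX`, `DECAY`, and `D_MIN` are hypothetical placeholders rather than values stated in this text, and only the 0.2 meter maximum range comes from the tutorial.

```python
import math

# Assumed sensor model (hypothetical constants, for illustration only):
#   raw = RAW_MAX * exp(-DECAY * (distance - D_MIN))
D_MIN = 0.02      # meters, closest detectable distance (assumption)
D_MAX = 0.2       # meters, maximum range mentioned in the text
RAW_MAX = 3960.0  # raw reading at D_MIN (assumption)
DECAY = 30.0      # exponential decay rate in 1/m (assumption)


def distance_to_reading(distance):
    """Forward model: the raw value a sensor would report at `distance`."""
    return RAW_MAX * math.exp(-DECAY * (distance - D_MIN))


def reading_to_distance(raw):
    """Invert the assumed model and clamp the result to the sensor range."""
    raw = min(max(raw, 1e-9), RAW_MAX)  # avoid log(0) and out-of-range values
    distance = D_MIN - math.log(raw / RAW_MAX) / DECAY
    return min(max(distance, D_MIN), D_MAX)
```

A real sensor would need its own calibration curve; the point is only that the controller works in meters, not in raw sensor units.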

The red points are particles of FastSLAM. Robots are already doing so much for us, and they are only going to be doing more in the future. It has been implemented here for a 2D grid. Learn how to create and execute a process in a robot program. It took many hours of tweaking one little variable here, and another equation there, to get it to work in a way I was satisfied with. A neat way to generate our desired reference vector is by turning our nine proximity readings into vectors and taking a weighted sum. This is sample code for Reeds-Shepp path planning. Finally, optional topics that will help you to better follow this tutorial are knowing what a state machine is and how range sensors and encoders work. This is motion planning with quintic polynomials. Keep following this wall until A) the obstacle is no longer between us and the goal, and B) we are closer to the goal than we were when we started. In this simulation N = 10; however, you can change it. (Unless some benevolent outside force restores it.) These are Simultaneous Localization and Mapping (SLAM) examples. Then we can be certain we have navigated the obstacle properly. This is optimal trajectory generation in a Frenet frame. Additional behaviors can be added to this framework, and you should try your own ideas after you finish reading! Behavior-based robotics software was proposed more than 20 years ago, and it's still a powerful tool for mobile robotics. The blue line is the true trajectory, and the black line is the dead reckoning trajectory. Occasionally it is legitimately imprisoned with no possible path to the goal.
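The weighted sum of proximity vectors can be sketched as follows. The sensor angles and the uniform gains below are assumptions chosen for illustration (the text only says there are nine sensors, with more facing forward), and `obstacle_avoidance_vector` is a hypothetical name.

```python
import math

# Assumed sensor directions in degrees, counterclockwise-positive,
# measured from the robot's forward axis (illustrative layout only).
SENSOR_ANGLES_DEG = [128, 75, 42, 13, -13, -42, -75, -128, 180]
# Hypothetical gains; a real controller would tune these per sensor.
SENSOR_GAINS = [1.0] * len(SENSOR_ANGLES_DEG)


def obstacle_avoidance_vector(distances):
    """Return the (x, y) reference vector in the robot frame.

    Each reading becomes a vector pointing along its sensor's direction
    with length equal to the measured distance. A nearby obstacle gives a
    short vector, so the sum leans away from that side.
    """
    x = y = 0.0
    for dist, angle_deg, gain in zip(distances, SENSOR_ANGLES_DEG, SENSOR_GAINS):
        angle = math.radians(angle_deg)
        x += gain * dist * math.cos(angle)
        y += gain * dist * math.sin(angle)
    return x, y


# Usage: every sensor at maximum range except the one pointing 75 degrees right.
readings = [0.2] * 9
readings[6] = 0.05
vx, vy = obstacle_avoidance_vector(readings)
heading = math.atan2(vy, vx)  # positive, i.e. shifted towards the left
```

With all sensors at maximum range the vector points straight ahead, because the layout is symmetric and front-heavy.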

I encourage you to play with the control variables in Sobot Rimulator and observe and attempt to interpret the results. Two things will help you follow along: mathematics (we will use some trigonometric functions and vectors) and Python (since Python is among the more popular basic robot programming languages, we will make use of basic Python libraries and functions). The simulator takes care of applying physics rules to the robot's movements and providing new values for the robot sensors. The control software assumes that nothing is ever going to push the robot around, that the sensors never fail or give false readings, and that the wheels always turn when they are told to. This is 2D grid-based shortest path planning with the D* algorithm.

However, as soon as we detect an obstacle with our proximity sensors, we want the reference vector to point in whatever direction is away from the obstacle. This algorithm finds the shortest path between two points while rerouting when obstacles are discovered. The more times we can do this per second, the finer control we will have over the system. The result is an endless loop of rapid switching that renders the robot useless. These estimates will never be perfect, but they must be fairly good because the robot will be basing all of its decisions on them. A double integrator motion model is used for the LQR local planner. The Official Tutorial: the good stuff starts at chapter 3. You don't have to worry about specific numbers in this tutorial because the software we will write uses the traveled distance expressed in meters. This is path planning for a car robot with RRT* and the Reeds-Shepp path planner. The robot always assumes its initial pose is (0, 0), 0. This is a powerful insight for roboticists. The blue line is ground truth, the black line is dead reckoning, and the red line is the estimated trajectory with FastSLAM. Black circles are obstacles, the green line is a searched tree, and red crosses are start and goal positions. Later I will show you how to compute it from ticks with an easy Python function. At the end of this course you will know how to automate robot tasks and have a good foundation for learning how to develop external robot controllers and post-processing robot programs. This is a 2D localization example with a histogram filter. To simplify the scenario, let's now forget the goal point completely and just make the following our objective: When there are no obstacles in front of us, move forward. Our Python robot framework implements the state machine in the file supervisor_state_machine.py.
The animation shows a robot finding its path while avoiding an obstacle using the D* search algorithm. In the animation, the blue heat map shows the potential value on each grid cell. Student Robotics robots are all programmed in Python 3.9; a number of tutorials for beginners are linked from here. OK, we have almost completed a single control loop. Here's the idea: When we encounter an obstacle, take the two sensor readings that are closest to the obstacle and use them to estimate the surface of the obstacle. In the mobile robot universe, our little robot's brain is on the simpler end of the spectrum. The simulator I built is written in Python and very cleverly dubbed Sobot Rimulator. You can find v1.0.0 on GitHub. We know ahead of time that the seventh reading, for example, corresponds to the sensor that points 75 degrees to the right of the robot. This README only shows some examples of this project. This is sensor fusion localization with a Particle Filter (PF). The fundamental challenge of all robotics is this: It is impossible to ever know the true state of the environment. A suggestion to elaborate on the velocity equation in go_to_goal_controller.py is to consider that we usually slow down when near the goal in order to reach it with zero speed. This is done in supervisor.py. Again, we have a specific sensor model in this Python robot framework, while in the real world, sensors come with accompanying software that should provide similar conversion functions from non-linear values to meters. The solution was called hybrid because it evolves both in a discrete and continuous fashion. This is a feature-based SLAM example using FastSLAM 1.0. This article was published on July 11, 2020. Equipped with our two handy behaviors, a simple logic suggests itself: When there is no obstacle detected, use the go-to-goal behavior.
In Sobot Rimulator, the separation between the robot "computer" and the (simulated) physical world is embodied by the file robot_supervisor_interface.py, which defines the entire API for interacting with the "real robot" sensors and motors. This interface internally uses a robot object that provides the data from sensors and the possibility to move motors or wheels.
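The suggestion above about slowing down near the goal can be sketched as a speed limit that shrinks with the remaining distance. This is only one possible elaboration: `goal_speed_limit`, `v_max`, and the gain `k_dist` are hypothetical names and values, not part of the framework described here.

```python
def goal_speed_limit(distance_to_goal, v_max=0.3, k_dist=1.0):
    """Cap the forward speed so that it falls to zero at the goal.

    Far from the goal the cap is simply v_max; close to it, the cap is
    proportional to the remaining distance (k_dist is an assumed gain).
    """
    return min(v_max, k_dist * max(distance_to_goal, 0.0))
```

The returned value would stand in for the constant maximum speed wherever the controller computes its forward velocity.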

Learn how to teach a robot to pick moving parts and then place them on other moving objects. But if a sensor on, say, the right side picks up an obstacle, it will contribute a smaller vector to the sum, and the result will be a reference vector that is shifted towards the left. In the worst case, the robot may switch between behaviors with every iteration of the control loop, a state known as a Zeno condition. This example shows how to convert a 2D range measurement to a grid map. Thus a heading of 0 indicates that the robot is facing directly east. In other words, programming a simulated robot is analogous to programming a real robot. This is critical if the simulator is to be of any use to develop and evaluate different control software approaches. This script is path planning code with state lattice planning. This is a path planning simulation with LQR-RRT*.
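To see why rapid switching happens and one generic way to dampen it, consider the sketch below. It uses hysteresis, meaning separate distances for entering and leaving avoidance mode; this is a common mitigation and an assumption on my part, not the approach this tutorial settles on (which adds a follow-wall behavior). The mode names and thresholds are hypothetical.

```python
def next_mode(mode, nearest_obstacle, enter_dist=0.05, exit_dist=0.10):
    """Pick the next behavior given the nearest obstacle distance in meters.

    With a single threshold, a robot hovering right at that distance would
    flip modes on every control-loop iteration. Requiring the obstacle to
    be farther away (exit_dist) before leaving avoidance mode than it was
    when entering (enter_dist) leaves a band where the mode stays put.
    """
    if mode == "go_to_goal" and nearest_obstacle < enter_dist:
        return "avoid_obstacles"
    if mode == "avoid_obstacles" and nearest_obstacle > exit_dist:
        return "go_to_goal"
    return mode
```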

This is a 2D grid-based coverage path planning simulation. A good general rule of thumb is one you probably know instinctively: If we are not making a turn, we can go forward at full speed, and then the faster we are turning, the more we should slow down. If we waited too long to measure the wheel tickers, both wheels could have done quite a lot, and it would be impossible to estimate where we have ended up. Instead of manually teaching every statement to a robot, you can write a script that calculates, records, and simulates an entire robot program. However, to complicate matters, the environment of the robot may be strewn with obstacles. Thus, if we read 0.2 meters on sensor seven, we will assume that there is actually no obstacle in that direction. Thus, if this value shows a reading corresponding to 0.1 meters distance, we know that there is an obstacle 0.1 meters away, 75 degrees to the right. Learn how to add and edit routines, statements, and positions in a robot program using the Python API.
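The rule of thumb about slowing down while turning can be written as a small function. The particular shape used here (dividing by the square root of |ω| + 1) and the value of `v_max` are assumptions for illustration; any function that equals the maximum speed at ω = 0 and decreases as |ω| grows would express the same idea.

```python
import math


def forward_velocity(omega, v_max=0.3):
    """Full speed when going straight, slower the harder we turn.

    omega is the angular velocity in rad/s; v_max is a hypothetical
    maximum forward speed in m/s.
    """
    return v_max / math.sqrt(abs(omega) + 1.0)
```

Because only the magnitude of ω is used, left and right turns slow the robot equally.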

and explains the basics while trying not to overwhelm you.

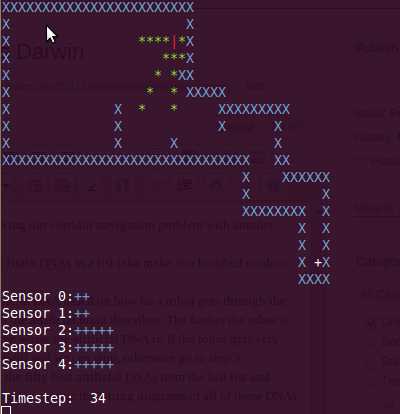

You can set the goal position of the end effector with a left-click on the plotting area. The robot bounces around aimlessly, but it never collides with an obstacle, and even manages to navigate some very tight spaces. So far we've described two behaviors, go-to-goal and avoid-obstacles, in isolation. Now that we have our angular velocity ω, how do we determine our forward velocity v? Our robot uses odometry to estimate its pose. This is a 3D trajectory following simulation for a quadrotor. The cyan line is the target course and black crosses are obstacles. Now that our robot is able to generate a good estimate of the real world, let's use this information to achieve our goals. It has to include somehow a replacement of v_max() with something proportional to the distance. Let's face it, robots are cool. Take a look at the Python code in follow_wall_controller.py to see how it's done. This proves to be a surprisingly difficult challenge for novice robotics programmers. Some even slither like a snake. More advanced robots make use of techniques such as mapping, to remember where they have been and avoid trying the same things over and over; heuristics, to generate acceptable decisions when there is no perfect decision to be found; and machine learning, to more perfectly tune the various control parameters governing the robot's behavior. These measurements are used for PF localization. However, if the robot finds itself in a tight spot, dangerously close to a collision, it will switch to pure avoid-obstacles mode until it is a safer distance away, and then return to follow-wall. The API function read_proximity_sensors() returns an array of nine values, one for each sensor. This is usually the basic feature that any mobile robot should have, from autonomous cars to robotic vacuum cleaners.
To control the robot we want to program, we have to send a signal to the left wheel telling it how fast to turn, and a separate signal to the right wheel telling it how fast to turn. The same concepts apply to the encoders. The goal of our software controlling this robot will be very simple: It will attempt to make its way to a predetermined goal point. That would allow you to quickly test and visualize your solution in simulation as well as refine the program and its logic. With our limited information, we can't say for certain whether it will be faster to go around the obstacle to the left or to the right. This is 2D grid-based shortest path planning with Dijkstra's algorithm. The first thing to note is that, in this guide, our robot will be an autonomous mobile robot. In this course you learn how to read and write a robot program as well as control a robot using Python scripts. This is where the wheel tickers come in. The supreme purpose in our little robot's existence in this programming tutorial is to get to the goal point.
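Here is a minimal sketch of how wheel ticker counts can be turned into a pose estimate by odometry. It assumes a differential-drive robot and uses the standard dead-reckoning update; the function name and the default geometry values (`ticks_per_rev`, `wheel_radius`, `wheel_base`) are hypothetical and would have to match the actual robot.

```python
import math


def update_odometry(pose, d_ticks_left, d_ticks_right,
                    ticks_per_rev=2765, wheel_radius=0.021, wheel_base=0.0885):
    """Advance pose (x, y, theta) using ticks counted since the last update.

    Assumes the motion over one update is small enough to be treated as a
    straight segment along the old heading, followed by a rotation.
    """
    x, y, theta = pose
    meters_per_tick = 2.0 * math.pi * wheel_radius / ticks_per_rev

    d_left = d_ticks_left * meters_per_tick    # distance rolled by left wheel
    d_right = d_ticks_right * meters_per_tick  # distance rolled by right wheel
    d_center = (d_left + d_right) / 2.0        # distance moved by robot center
    d_theta = (d_right - d_left) / wheel_base  # change in heading (radians)

    x += d_center * math.cos(theta)
    y += d_center * math.sin(theta)
    theta += d_theta
    # Wrap the heading into (-pi, pi] so it stays comparable over time.
    theta = math.atan2(math.sin(theta), math.cos(theta))
    return (x, y, theta)
```

The small-motion assumption is why the ticks must be read often: the longer the interval, the worse the straight-segment approximation becomes.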

This is an N-joint arm to a point control simulation. The specific features implemented refer to the Khepera III, but they can be easily adapted to the new Khepera IV. Determining the position and heading of the robot (together known as the pose in robotics programming) is somewhat more challenging. Changes to the control variables all have profound effects on the simulated robot's behavior. We've done a lot of work to get to this point, and this robot seems pretty clever. As soon as the real world deviates from these assumptions, however, we will no longer be able to make good guesses, and control will be lost. What this system will tend to do when it encounters an obstacle is to turn away from it, then as soon as it has moved away from it, turn right back around and run into it again. The code is easy to read for understanding each algorithm's basic idea. This is a path tracking simulation with Stanley steering control and PID speed control. But it could be a good idea to have a separate Python thread running faster to catch smaller movements of the tickers. When an obstacle is encountered, turn away from it until it is no longer in front of us.

Let's get familiar with our simulated programmable robot. I'm joking, of course, but only sort of. Both perform their function admirably, but in order to successfully reach the goal in an environment full of obstacles, we need to combine them. How would this formula change? But there are many more advanced concepts that can be learned and tested quickly with a Python robot framework similar to the one we prototyped here.

But if you are curious, I will briefly introduce it here. So how do we make the wheels turn to get it there? Many advances in robotics come from observing living creatures and seeing how they react to unexpected stimuli. All animation GIFs are stored in AtsushiSakai/PythonRoboticsGifs. To install, clone the repository (git clone https://github.com/AtsushiSakai/PythonRobotics.git) and install the requirements with either conda (conda env create -f requirements/environment.yml) or pip (pip install -r requirements/requirements.txt). Learn how to teach a Delta robot to pick and place parts on the same conveyor using the Python API. A list of users' comments and references is in users_comments. To contribute, please check the document How To Contribute in the PythonRobotics documentation. If you use this project's code for your academic work, we encourage you to cite our papers. This PRM planner uses Dijkstra's method for graph search. If you are interested in other examples or the mathematical background of each algorithm, you can check the full documentation online. The step function is executed in a loop so that robot.step_motion() moves the robot using the wheel speed computed by the supervisor in the previous simulation step. What we need for our simple simulated robot is an easier solution: One more behavior specialized with the task of getting around an obstacle and reaching the other side. Instead of asking, "How fast do we want the left wheel to turn, and how fast do we want the right wheel to turn?" it is more natural to ask, "How fast do we want the robot to move forward, and how fast do we want it to turn, or change its heading?" Let's call these parameters velocity v and angular (rotational) velocity ω (read "omega").
This is 2D grid-based shortest path planning with the A* algorithm. This affects the choice of which robot programming languages are best to use: Usually, C++ is used for these kinds of scenarios, but in simpler robotics applications, Python is a very good compromise between execution speed and ease of development and testing. Often, once control is lost, it can never be regained. I hope you will consider getting involved in the shaping of things to come. We will give readers hints on how to improve the control framework of our robot with an additional check to avoid circular obstacles. There are a number of tutorials out there which might help you to learn to program in Python: Our tutorial, called Python: A whirlwind tour. This is a bipedal planner for modifying footsteps for an inverted pendulum. When both wheels turn at the same speed, the robot moves in a straight line. If the error in our heading is 0, then the turning rate is also 0. If we go forward while facing the goal, we will get there. The red cross is the true position, and black points are RFID positions. When your assumptions about the world are not correct, it can put you at risk of losing control of things. This is a path tracking simulation with iterative linear model predictive speed and steering control. Thus, controlling the movement of this robot comes down to properly controlling the rates at which each of these two wheels turn. If you want to create a different robot, you simply have to provide a different Python robot class that can be used by the same interface, and the rest of the code (controllers, supervisor, and simulator) will work out of the box! Videos of your robot using PythonRobotics are very welcome! When the wheels move at different speeds, the robot turns.
The final state diagram is programmed inside supervisor_state_machine.py, and with this control scheme the robot successfully navigates a crowded environment. An additional feature of the state machine that you can try to implement is a way to avoid circular obstacles by switching to go-to-goal as soon as possible instead of following the obstacle border until the end (which does not exist for circular objects!). Instead of running headlong into things in our way, let's try to program a control law that makes the robot avoid them. Every robot comes with different capabilities and control concerns. (Hopefully.) There is a lot there, and it may be a little overwhelming. However, constantly thinking in terms of vL and vR is very cumbersome. This is 2D object clustering with the k-means algorithm. Black points are landmarks, and blue crosses are landmark positions estimated by FastSLAM. We will now enter into the core of our control software and explain the behaviors that we want to program inside the robot. These can include anything from proximity sensors, light sensors, bumpers, cameras, and so forth. Because of the way the infrared sensors work (measuring infrared reflection), the numbers they return are a non-linear transformation of the actual distance detected. Thus, v is a function of ω. It compares this state to a reference value of what it wants the state to be (for the distance, it wants it to be zero), and calculates the error between the desired state and the actual state. If there is no obstacle, the sensor will return a reading of its maximum range of 0.2 meters. Control variables worth experimenting with include the sensor gains used by the avoid-obstacles controller and the obstacle standoff distance used by the follow-wall controller.
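To tie the pieces together, here is a sketch of two steps discussed above: computing the heading error towards the goal, and converting a (v, ω) command into left and right wheel rates. The conversion is the standard differential-drive relation; the function names and the default wheel geometry are hypothetical, and a proportional gain would turn the error into ω.

```python
import math


def heading_error(pose, goal):
    """Signed angle (radians) from the current heading to the goal direction."""
    x, y, theta = pose
    goal_x, goal_y = goal
    desired = math.atan2(goal_y - y, goal_x - x)
    error = desired - theta
    # Wrap so that the robot always turns the short way around.
    return math.atan2(math.sin(error), math.cos(error))


def uni_to_diff(v, omega, wheel_radius=0.021, wheel_base=0.0885):
    """Convert forward velocity v (m/s) and angular velocity omega (rad/s)
    into left and right wheel angular rates (rad/s)."""
    v_left = (2.0 * v - omega * wheel_base) / (2.0 * wheel_radius)
    v_right = (2.0 * v + omega * wheel_base) / (2.0 * wheel_radius)
    return v_left, v_right


# Usage: a proportional controller with an assumed gain of 5.0.
omega = 5.0 * heading_error((0.0, 0.0, 0.0), (1.0, 1.0))
left_rate, right_rate = uni_to_diff(0.1, omega)
```

With ω = 0 both wheels get the same rate and the robot drives straight; a positive ω speeds up the right wheel, turning the robot counterclockwise.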